Become a Validator

Validators are node operators, each storing a copy of the blockchain and performing certain functions to keep the system secure. On The Root Network, validator nodes are responsible for authoring new blocks and participating in the finalization protocol. Validators are an essential part of the Proof-of-Stake consensus mechanism.

Running a validator on a live network is a high-risk, high-reward opportunity. You will be accountable for not only your own stake, but also the stake of your current nominators. If a mistake occurs and your node gets slashed, your tokens and your reputation will be at risk. However, running a validator can also be very rewarding, knowing that you contribute to the security of a decentralized network while growing your rewards.

It is highly recommended that you have significant system administration experience before attempting to run your own validator. You must be able to handle technical issues and anomalies with your node yourself. Being a validator involves more than just executing The Root Network binary; it requires a comprehensive understanding of system administration to navigate and troubleshoot the challenges that arise.

Requirements

- A non-custodial FuturePass account with at least 5 XRP to pay for 3 transactions and a minimum of 100_000 ROOT for staking.

- A continuously running node that meets the following requirements:

| | Minimum | Recommended |
|---|---|---|
| CPU | | |
| Memory | 4GB | 8GB |
| Storage | 1TB | 1TB |
| Network | Symmetric networking speed at 500 Mbit/s (= 62.5 MB/s) | Symmetric networking speed at 500 Mbit/s (= 62.5 MB/s) |
| System | Linux Kernel 5.16 or newer | Linux Kernel 5.16 or newer |
| AWS Instance Type | c5.large instance | c5.2xlarge instance |
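As a quick sanity check before installing anything, the kernel requirement from the table can be tested on the host. The sketch below is illustrative only: the helper name `kernel_at_least` is hypothetical, and it compares just the major and minor version numbers reported by `uname -r`.

```shell
#!/bin/sh
# Hypothetical helper (not part of any Root Network tooling).
# Succeeds when kernel release $2 (e.g. "6.1.0-18-amd64") is at least
# the required "major.minor" version $1 (e.g. "5.16").
kernel_at_least() {
  req_major=${1%%.*}
  req_minor=${1#*.}
  cur_major=${2%%.*}
  rest=${2#*.}
  cur_minor=${rest%%[!0-9]*}   # keep only the leading digits of the minor version
  [ "$cur_major" -gt "$req_major" ] ||
    { [ "$cur_major" -eq "$req_major" ] && [ "$cur_minor" -ge "$req_minor" ]; }
}

# Compare the running kernel against the minimum from the table above.
if kernel_at_least 5.16 "$(uname -r)"; then
  echo "kernel OK: $(uname -r)"
else
  echo "kernel too old: $(uname -r) (need 5.16 or newer)"
fi
```

Memory, storage and network throughput are not covered here and still need to be checked separately.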

Validators Election Process

Anyone meeting the above requirements can signal their intention to become a validator. However, only a limited number of validator nodes are elected in each staking era (24 hours). The remaining nodes will be on the waiting list until the next era, to rejoin the election process.

The election process follows a set of well-known algorithms in the Substrate blockchain ecosystem to find the optimal way to satisfy the three metrics below:

- Maximize the total amount at stake.

- Maximize the stake behind the minimally staked validator.

- Minimize the variance of the stake in the set.

The Root Network will have 42 validator slots for the open beta. This number will increase as the network matures.

Setup Instructions

Step 1: Run a validator node

Follow the instructions provided here to have your validator node up and running. It's important to have your validator node set up with telemetry support to ensure that it can be properly monitored.

```shell
# Root Mainnet
seed --chain=root --validator \
  --name="My TRN Validator Node" \
  --sync=warp \
  --telemetry-url="wss://telemetry.rootnet.app:9443/submit 0"

# Porcini Testnet
seed --chain=porcini --validator \
  --name="My TRN Validator Node" \
  --sync=warp \
  --telemetry-url="wss://telemetry.rootnet.app:9443/submit 0"
```

Wait until your node completes the synchronisation before proceeding to the next step. You should see some output similar to the following:

```text
2024-02-08 21:33:18 ⏩ Warping, Importing state, 517.34 Mib (9 peers), best: #0 (0x046e…d338), finalized #0 (0x046e…d338), ⬇ 1.7kiB/s ⬆ 0.8kiB/s
2024-02-08 21:33:23 ⏩ Warping, Importing state, 517.34 Mib (9 peers), best: #0 (0x046e…d338), finalized #0 (0x046e…d338), ⬇ 1.2kiB/s ⬆ 0.6kiB/s
2024-02-08 21:33:28 ⏩ Warping, Importing state, 517.34 Mib (9 peers), best: #10705147 (0xbbd8…77d2), finalized #10705147 (0xbbd8…77d2), ⬇ 1.7kiB/s ⬆ 0.4kiB/s
2024-02-08 21:33:29 Warp sync is complete (517 MiB), restarting block sync.
```
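Sync progress can also be checked over RPC rather than by reading the log. This is a minimal sketch, assuming the node's HTTP RPC endpoint is reachable at `localhost:9933` (the address used in Step 2) and exposes the standard Substrate `system_health` method. The helper names `fetch_health` and `is_synced` are hypothetical.

```shell
#!/bin/sh
# Hypothetical helpers; the RPC URL is an assumption, adjust it to your node.
RPC_URL="${RPC_URL:-http://localhost:9933}"

# Fetch the node health report (peers, isSyncing, shouldHavePeers).
fetch_health() {
  curl -s -H "Content-Type: application/json" \
    -d '{"id":1, "jsonrpc":"2.0", "method": "system_health", "params": []}' \
    "$RPC_URL"
}

# Read a system_health response on stdin; succeed only if isSyncing is false.
is_synced() {
  grep -q '"isSyncing"[[:space:]]*:[[:space:]]*false'
}

# Usage: poll until the node reports that it is no longer syncing.
# until fetch_health | is_synced; do sleep 30; done
```

Treat a passing check as a hint rather than proof, and still confirm in the log or on Telemetry that the best block is close to the chain head before moving on.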

Step 2: Generate session keys

Run a curl command targeting your node to generate its session keys. This is an important step to link your node with your validator account.

```shell
curl -H "Content-Type: application/json" -d '{"id":1, "jsonrpc":"2.0", "method": "author_rotateKeys", "params": []}' localhost:9933
```

Replace "localhost:9933" with the host name and RPC port of your node.

Once the command is executed successfully, you will see a JSON response like this:

```json
{
  "id": 1,
  "jsonrpc": "2.0",
  "result": "0xded0a4c6440f2b93f2fdc56c80b9a0adb58a6f64157f8a62c12f8cea61c0582c26475545399e236f732a2cc542a8938dbd3d51acbf6dd3fa26b129c549e5283f7d95650ed6948767d2871ba000b0a2618857fb43f9dc2462adbd62c4eb323ee9034c5fff514f25a1befa6409650ed35e056c88c900fc1e52775c831e7a7f92b1c2"
}
```

The session keys are in the "result" field of the response. Leave the terminal open with the response, as you will need it in Step 5.
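To avoid copying the keys by hand, the "result" field can be pulled out of a saved response and checked against the node's keystore. This is a sketch under assumptions: `extract_result` is a hypothetical helper, `keys.json` is a hypothetical file holding the response, and `author_hasSessionKeys` is the standard Substrate RPC method. Like `author_rotateKeys`, it is normally only available on a locally exposed RPC endpoint.

```shell
#!/bin/sh
# Hypothetical helper: print the "result" string of a JSON-RPC response read from stdin.
extract_result() {
  sed -n 's/.*"result"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}

# Usage (assumes the author_rotateKeys response was saved to keys.json
# and that the RPC endpoint is localhost:9933):
# SESSION_KEYS=$(extract_result < keys.json)
# curl -H "Content-Type: application/json" \
#   -d "{\"id\":1, \"jsonrpc\":\"2.0\", \"method\": \"author_hasSessionKeys\", \"params\": [\"$SESSION_KEYS\"]}" \
#   localhost:9933
# A result of true would indicate that the node's keystore holds these keys.
```

Save the original response rather than calling `author_rotateKeys` a second time, because each call generates a fresh set of keys.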

Step 3: Connect FuturePass EOA account with the Portal

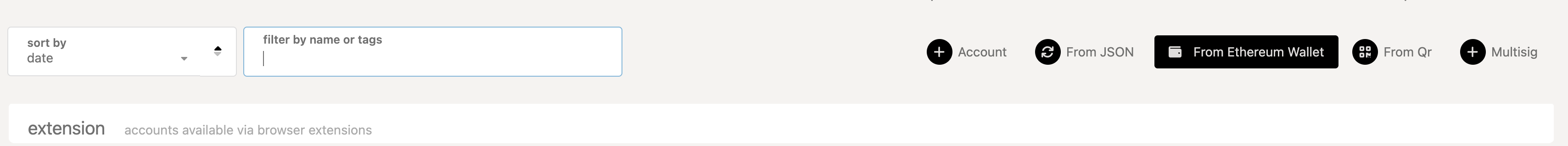

- Go to the Portal Account page and select From Ethereum Wallet.

- When the “Connect with MetaMask” dialog opens, select the EOA account with the FuturePass that you want to use as your validator, then click Next.

- Confirm that both your EOA account and FuturePass account appear on the Account page.

Step 4: Stake the required ROOT amount

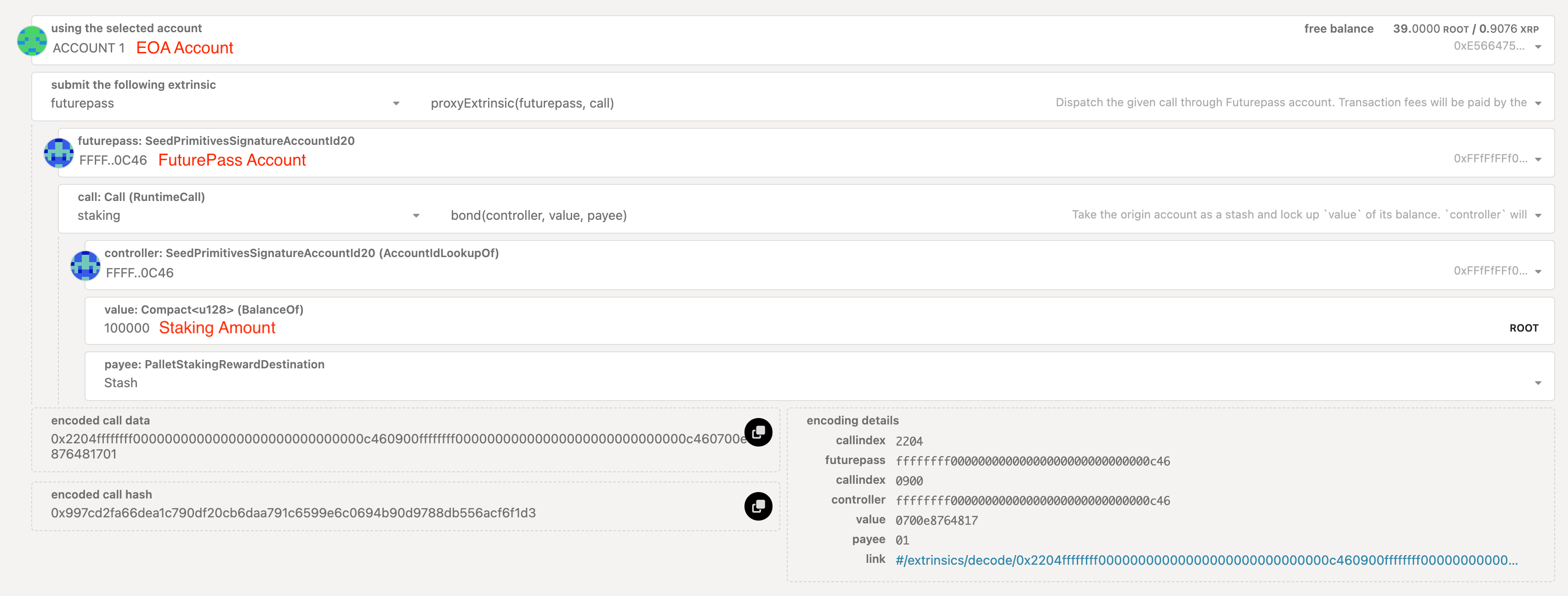

- Go to the Portal Extrinsics page and input the values as per the screenshot below:

- Click Submit Transaction when done and select Sign when prompted by MetaMask.

- The transaction is confirmed when a green tick appears on the top right corner.

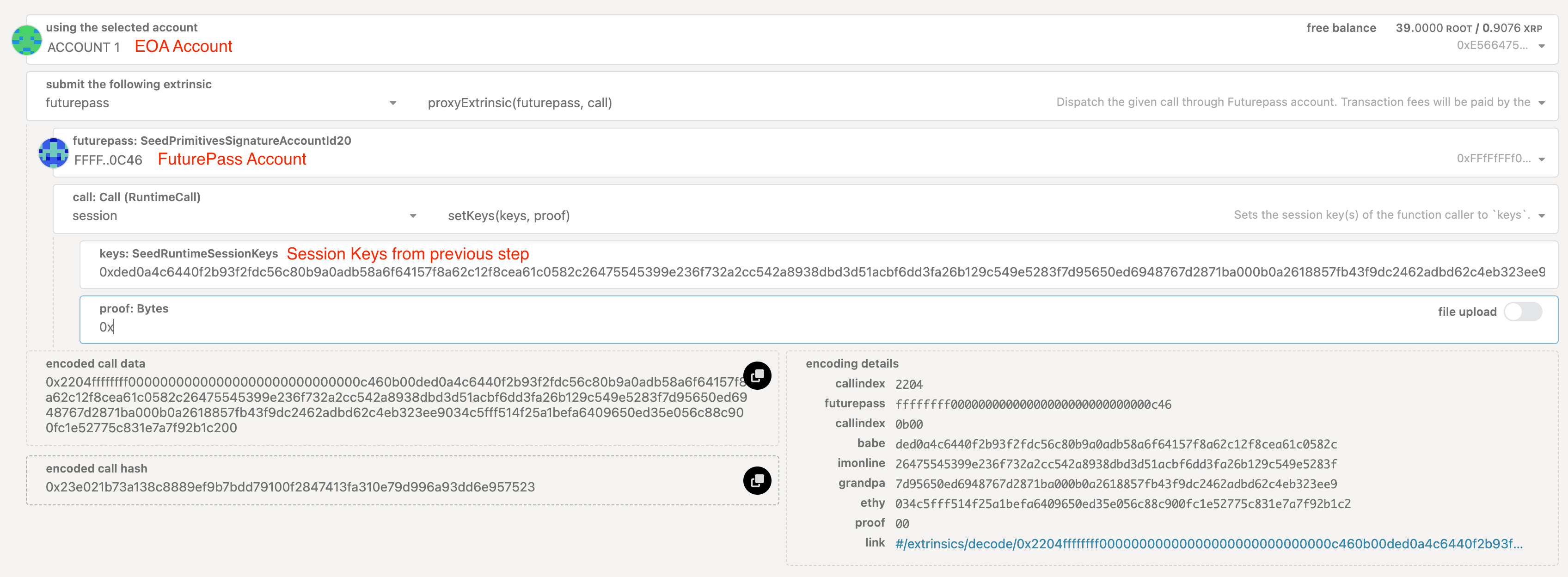

Step 5: Set session keys from your node

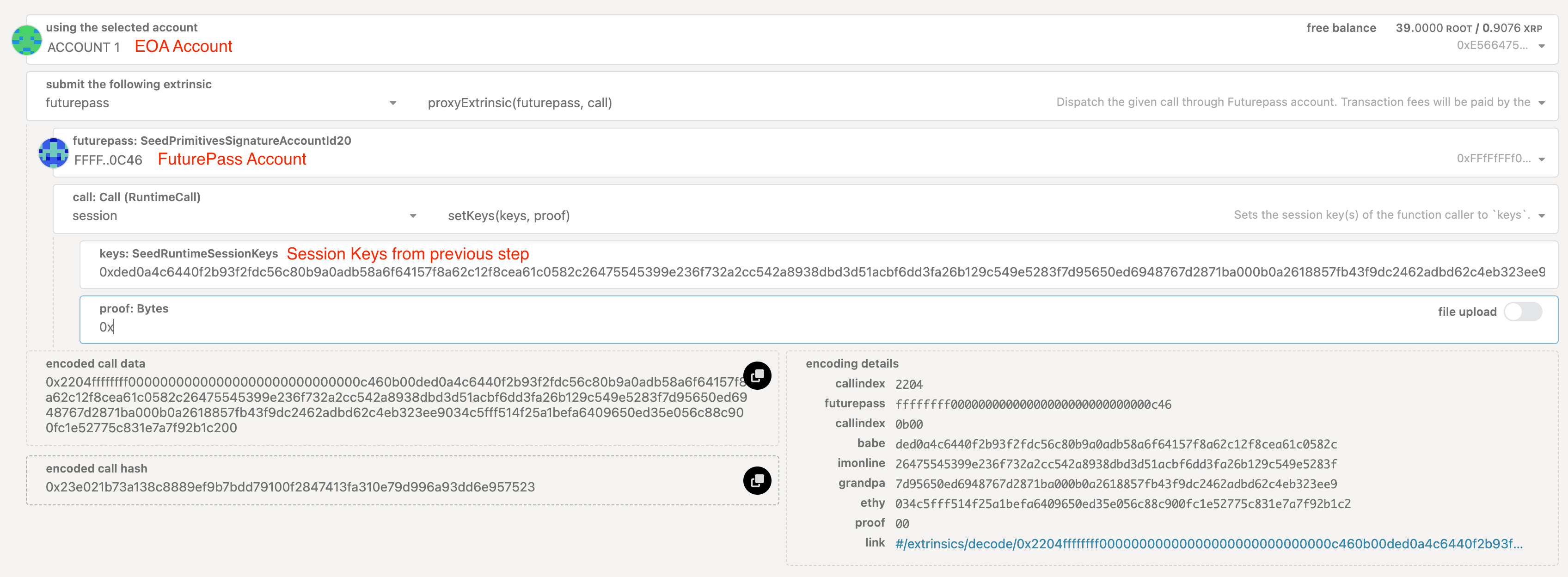

- Go to the Portal Extrinsics page and input the values as per the screenshot below, with the session keys value from Step 2.

- Click Submit Transaction when done and select Sign when prompted by MetaMask.

- The transaction is confirmed when a green tick appears on the top right corner.

Step 6: Request to validate

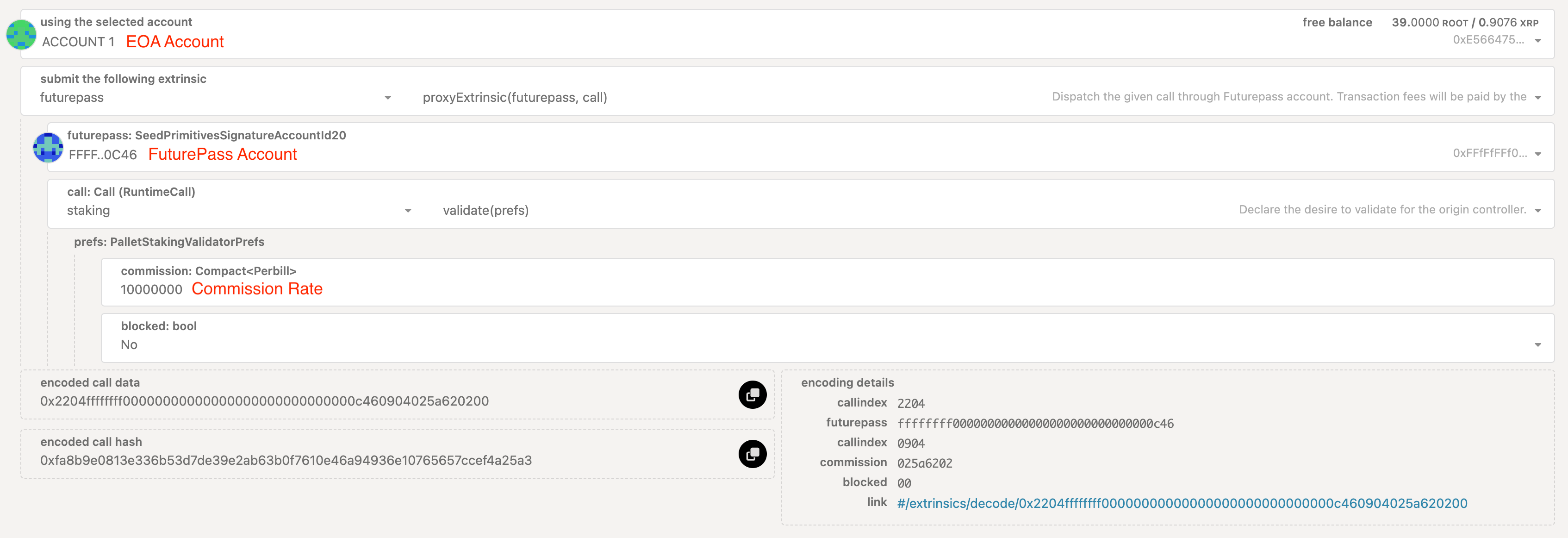

- Go to the Portal Extrinsics page and input the values as per the screenshot below, with your chosen commission rate.

The commission rate is expressed in parts per billion, so 10000000 = 1% and 100000000 = 10%.

- Click Submit Transaction when done and select Sign when prompted by MetaMask.

- The transaction is confirmed when a green tick appears on the top right corner.
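Because the commission is entered in parts per billion, it is easy to be off by a factor of ten. The conversion can be sketched as below; `commission_to_perbill` is a hypothetical helper name, and the factor follows from 1% of 1,000,000,000 being 10,000,000.

```shell
#!/bin/sh
# Hypothetical helper: convert a commission percentage to parts per billion.
# 1% of 1_000_000_000 is 10_000_000, so the factor is 10000000 per percent.
commission_to_perbill() {
  awk -v pct="$1" 'BEGIN { printf "%.0f\n", pct * 10000000 }'
}

commission_to_perbill 1     # prints 10000000
commission_to_perbill 2.5   # prints 25000000
commission_to_perbill 10    # prints 100000000
```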

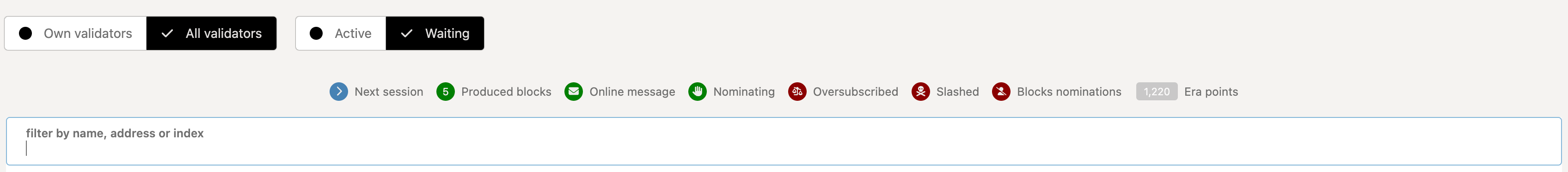

Final Step: Confirm your validator node is on the Waiting list

Go to the Portal Staking page, select the Waiting tab, and confirm that your FuturePass account is on the list with the correct commission rate.

That’s it. The only thing left is to wait until the next era and see whether your validator is elected to the active validators list.

A single FuturePass account can be either a validator or a nominator, but not both. Avoid using the same FuturePass account to nominate after you've completed the above steps; otherwise the Staking protocol will change your role back to nominator at the end of the current era, and you will need to repeat these steps to become a validator again.

Upgrade Instructions

Quick Upgrade

Validators perform critical functions for the network, and as such, have a strict uptime requirement. Validators may have to go offline for short periods to upgrade client software or the host machine. Usually, standard client upgrades will only require you to stop the service, replace the binary (or the Docker container) and restart the service. This operation can be executed within a session (4 hours) with minimal downtime.

Docker Container

For a node running in a Docker container, follow these steps to upgrade your node client version:

- Stop and rename the Docker container so that it can be re-created with the latest image.

```shell
docker stop <CONTAINER_ID>
docker rename <CONTAINER_ID> prev-<CONTAINER_ID>
```

- Pull the latest Docker image from GitHub:

```shell
docker image rm ghcr.io/futureversecom/seed:latest
docker image pull ghcr.io/futureversecom/seed:latest
```

- Re-create the validator container using the latest image:

```shell
docker run ...
```

Re-creating the Docker container will not cause your node to re-sync from block 0, as long as your Docker container is set up with the correct volume mapping as per the instructions.

- Confirm your node is working correctly and running the latest version on Telemetry, then remove the old container:

```shell
docker rm prev-<CONTAINER_ID>
```

Binary Source

For a node running a binary built from source, you can follow the steps here to rebuild the binary at the latest version and restart your node.

Long-lead Upgrade

Validators may also need to perform long-lead maintenance tasks that span more than one session. Under these circumstances, an active validator may choose to chill their stash, perform the maintenance and request to validate again. Alternatively, the validator may substitute the active validator server with another, allowing the former to undergo maintenance with zero downtime.

This section will provide an option to seamlessly substitute Node A, an active validator server, with Node B, a substitute validator node, to allow for upgrade/maintenance operations for Node A.

Step 1: At Session N

- Follow steps 1 and 2 from the Setup Instructions above to set up the new Node B.

- Go to the Portal Extrinsics page and input the values as per the screenshot below, with the session keys value from step 2 of the Setup Instructions.

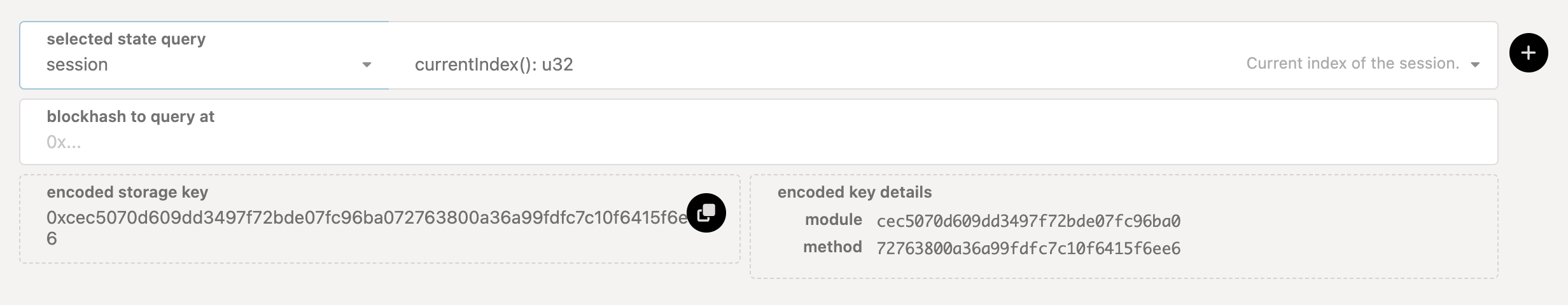

- Go to the Portal Chain State page and query session.currentIndex() to take note of the session in which this extrinsic was executed.

- Allow the current session to elapse and then wait for two full sessions.

You must keep Node A running during this time. session.setKeys does not take effect immediately; two full sessions must elapse first. If you switch off Node A too early, you risk being removed from the active set.
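The waiting rule above reduces to simple arithmetic. This is a sketch, assuming the 4-hour session length mentioned under Quick Upgrade. The helper name `first_safe_session` is hypothetical, and the session index used is an example value standing in for whatever session.currentIndex() returned when session.setKeys was submitted.

```shell
#!/bin/sh
# Hypothetical helper: given the session index N in which session.setKeys was
# executed, print the first session in which Node B's keys are in effect.
# The rest of session N must elapse, then two full sessions: N+1 and N+2.
first_safe_session() {
  echo $(( $1 + 3 ))
}

SESSION_HOURS=4   # session length stated in this guide
N=1200            # example value only
echo "Keys submitted in session $N; keep Node A running until session $(first_safe_session "$N")"
echo "Expected wait: between $(( 2 * SESSION_HOURS )) and $(( 3 * SESSION_HOURS )) hours"
```

The lower bound applies when the extrinsic lands at the very end of session N, the upper bound when it lands at the very start.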

Step 2: At Session N+3

Verify that authority has passed from Node A to Node B by inspecting Node A's log for messages like the ones below:

```text
2024-02-29 21:28:26 👴 Applying authority set change scheduled at block #541
2024-02-29 21:28:26 👴 Applying GRANDPA set change to new set...
```

And in Node B, you should see log messages like these:

```text
2024-02-29 20:32:04 🙌 Starting consensus session on top of parent 0xa692ad56e2fb5601fc04e4e9cd41615b227fef0e93129601c5143ba8c723291c
2024-02-29 20:32:04 🎁 Prepared block for proposing at 12055 (3 ms) [hash: 0x8f1496f960e7e32a952fcbc335eb188c776e147e8facf3b7ca2aeb12f2c2a82c; parent_hash: 0xa692…291c; extrinsics (1): [0x47ac…c7f3]]
2024-02-29 20:32:04 🔖 Pre-sealed block for proposal at 12055. Hash now 0xe3dbc6f48223029d0e0d281c3c05994beebb9fca4b5e8a0befdb4938b36133b2, previously 0x8f1496f960e7e32a952fcbc335eb188c776e147e8facf3b7ca2aeb12f2c2a82c.
```

Once confirmed, you can safely perform any maintenance operations on Node A, then switch back to it by repeating Step 1 above for Node A. Again, keep Node B running until the current session finishes and two additional full sessions have elapsed.

Best Practices to Avoid Slashing

Slashing is implemented to deter validators from misbehaving. Slashes are applied to a validator’s total stake (own + nominated) and can range from as little as 0.01% up to 100%. In all instances, slashes are accompanied by a loss of nominators.

A slash may occur under four circumstances:

- Unresponsiveness – Slashing starts when 10% of the active validator set is offline and increases linearly until 44% of the validator set is offline; at this point, the slash is held at 7%.

- Equivocation – A slash of 0.01% is applied with as little as a single equivocation. The slashed amount increases incrementally to 100% as more validators also equivocate.

- Malicious Action – This may result from a validator trying to represent the contents of a block falsely. Slashing penalties of 100% may apply.

- Application Related (bug or otherwise) – The amount is unknown and may manifest as any of the three scenarios above.
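The unresponsiveness curve described above matches the formula used by Substrate's im-online offence, min(3 * (k - (n / 10 + 1)) / n, 1) * 7%, where k is the number of offline validators and n is the size of the active set. The sketch below evaluates it in parts per billion. It assumes The Root Network uses the same parameters, the helper name is hypothetical, and its integer rounding may differ slightly from the runtime's fixed-point arithmetic.

```shell
#!/bin/sh
# Sketch of the Substrate-style unresponsiveness slash, in parts per billion:
#   slash = min(3 * (k - (n / 10 + 1)) / n, 1) * 7%
# k = offline validators, n = active validator set size (n / 10 is integer division).
# Assumption: The Root Network runtime uses these same parameters.
unresponsiveness_slash_perbill() {
  k=$1
  n=$2
  over=$(( k - (n / 10 + 1) ))
  if [ "$over" -le 0 ]; then
    echo 0
    return
  fi
  ratio=$(( 3 * over * 1000000000 / n ))
  if [ "$ratio" -gt 1000000000 ]; then
    ratio=1000000000   # cap the ratio at 100%
  fi
  echo $(( ratio * 7 / 100 ))
}

unresponsiveness_slash_perbill 4 42    # prints 0 (under the threshold)
unresponsiveness_slash_perbill 19 42   # prints 70000000 (the 7% cap)
```

With the 42 validator slots mentioned earlier, the cap is reached at 19 offline validators, which is roughly 45% of the set.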

This section provides some best practices to prevent slashing, based on lessons learned from previous slashes on the Polkadot network. It provides comments and guidance for all circumstances except malicious action by the node operator.

Unresponsiveness

An offline event occurs when a validator does not produce a BLOCK or IMONLINE message within an EPOCH. Isolated offline events do not result in a slash; however, the validator would not earn any work points while offline. A slash for unresponsiveness occurs when 10% or more of the active validators are offline at the same time.

The following recommendations help validators avoid liveness-related slashing on servers that have historically functioned:

- Use systemd to host your validator instance. Systemd should be configured to restart the service automatically with a minimum 60-second delay (the Restart= and RestartSec= directives control this). This configuration helps re-establish the instance after isolated failures of the binary.

- A validator instance can appear unresponsive if it cannot sync new blocks. This may result from insufficient disk space or a corrupt database.

- Monitoring should be implemented that allows node operators to check network connectivity to the peer-to-peer port of the validator instance. Additionally, monitoring should ensure that there is a drift of less than 50 blocks between the target and best blocks. If either check fails, the node operator should be notified.
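The block-drift check from the last recommendation can be sketched with the standard Substrate `system_syncState` RPC method, which reports `currentBlock` and `highestBlock`. The RPC address, the helper names and the alert action below are assumptions. Some node versions may omit `highestBlock`, in which case the helper fails rather than guessing.

```shell
#!/bin/sh
# Hypothetical monitoring helpers; the RPC URL and the alert action are assumptions.
RPC_URL="${RPC_URL:-http://localhost:9933}"
MAX_DRIFT=50   # threshold suggested in the text above

# Fetch the sync state (startingBlock, currentBlock, highestBlock).
fetch_sync_state() {
  curl -s -H "Content-Type: application/json" \
    -d '{"id":1, "jsonrpc":"2.0", "method": "system_syncState", "params": []}' \
    "$RPC_URL"
}

# Print the numeric field named $2 from the JSON text held in $1.
json_number() {
  printf '%s\n' "$1" | sed -n 's/.*"'"$2"'"[[:space:]]*:[[:space:]]*\([0-9][0-9]*\).*/\1/p'
}

# Read a system_syncState response on stdin and print highestBlock - currentBlock.
# Fails if either field is missing from the response.
block_drift() {
  response=$(cat)
  current=$(json_number "$response" currentBlock)
  highest=$(json_number "$response" highestBlock)
  if [ -z "$current" ] || [ -z "$highest" ]; then
    return 1
  fi
  echo $(( highest - current ))
}

# Usage, e.g. from a cron job:
# drift=$(fetch_sync_state | block_drift) || echo "ALERT: could not read sync state"
# [ "${drift:-0}" -lt "$MAX_DRIFT" ] || echo "ALERT: node is $drift blocks behind"
```

In practice the echo lines would be replaced by whatever notification channel the operator already uses.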

The following recommendations help validators avoid liveness issues on new or migrated servers:

- Ensure that the --validator flag is used when starting the validator instance.

- If switching keys, ensure that the correct session keys are applied.

- If migrating using a two-server approach, ensure that you don’t switch off the original server too soon.

- Ensure that the database on the new server is fully synchronized.

- It is highly recommended to avoid hosting on providers that other validators may also use. If the provider fails, one or more other validators are likely to go offline at the same time, which builds towards a slash.

Equivocation

Equivocation events can occur when a validator produces two or more blocks in the same slot; this is called a BABE equivocation. Equivocation may also happen when a validator signs two or more conflicting consensus votes in the same round; this is called a GRANDPA equivocation. Equivocations usually occur when duplicate signing keys reside on more than one validator host. If keys are never duplicated, the probability of an equivocation slash decreases to near 0.

The following are scenarios that build towards slashes under equivocation:

- Cloning a server, i.e., copying all contents when migrating to new hardware, should be avoided. If an image is desired, it should be taken before keys are generated.

- High Availability (HA) Systems – Equivocation can occur if there are any concurrent operations, either when a failed server restarts or if a false-positive event results in both servers being online simultaneously. HA systems are to be treated with extreme caution and are not advised.

- The keystore folder is copied when attempting to copy a database from one instance to another.

It is important to note that equivocation slashes occur with a single incident. This can happen if duplicated key stores are used for only a few seconds.
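One way to reduce the risk from the last scenario is to exclude the keystore explicitly whenever node data is copied. This is a minimal sketch, assuming the usual Substrate layout in which keys live in a directory named `keystore` under the node's base path. The helper name and the example paths are hypothetical, and the node should be stopped before its database is copied.

```shell
#!/bin/sh
# Hypothetical helper: copy a node's data directory from $1 to $2 while
# leaving out every directory named "keystore", so keys are never duplicated.
copy_without_keystore() {
  mkdir -p "$2" &&
    tar -C "$1" --exclude=keystore -cf - . | tar -C "$2" -xf -
}

# Usage (paths are examples only):
# copy_without_keystore /data/seed-old /data/seed-new
# find /data/seed-new -name keystore   # should print nothing
```

The new server then generates its own session keys as in Step 2 of the Setup Instructions, instead of inheriting the old ones.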

Application Related

The following are advised to node operators to ensure that they obtain pristine binaries or source code and to ensure the security of their node:

- Always download either source files or the Docker image from the official Root Network repository.

- Verify the hash of downloaded files.

- Use the W3F secure validator setup or adhere to its principles.

- Ensure that essential security items are checked, including the use of a firewall, proper management of user access, and the implementation of SSH certificates.

- Avoid using your server as a general-purpose system. Hosting a validator on your workstation or one that hosts other services increases the risk of maleficence.
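The hash verification step above can be sketched as follows. It assumes a published SHA-256 digest is available for the file you downloaded and that `sha256sum` from GNU coreutils is installed (on macOS, `shasum -a 256` plays the same role). The helper name, file name and digest placeholder are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if the SHA-256 digest of file $1 equals
# the expected hex digest $2 obtained from a trusted source.
verify_sha256() {
  actual=$(sha256sum "$1" | cut -d' ' -f1)
  [ "$actual" = "$2" ]
}

# Usage (file name and digest are placeholders):
# verify_sha256 ./seed "<expected-sha256>" && echo "hash OK" || echo "hash MISMATCH"
```

The expected digest should come from a channel separate from the download itself, so that a tampered file and a tampered checksum cannot arrive together.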